Moderation and censorship in the Web3 era

How do we build scalable automated content moderation without centralized unilateral censorship authorities?

The Web content moderation landscape

The recent killings in Buffalo, New York, and their alleged connections to the anonymous imageboard 4chan have reignited the debate over Internet moderation and censorship. At issue is the responsibility of social media platforms and of infrastructure and network service providers to censor hate speech, terrorist propaganda and incitement, graphic violence, conspiracy theories, misinformation, and other categories of content deemed illegal or objectionable.

Censorship and moderation of content are critical issues for existing Web 2.0 platforms, yet so far they have received little attention for Web3.

One of the defining features of today's Web is the predominance of user-generated content (UGC). Earlier in its history, users accessed content on the Web that was mostly created and curated by professionals, as in traditional encyclopedias. Publishing content required access to resources like servers, network connectivity, and cameras, as well as the programming, design, and production skills needed to create pages, images, videos, and so on.

Today all kinds of writing, image, art, music, dance, photo, and video content can be published easily via social media platforms like Facebook, TikTok, or YouTube. Corporations make money by providing a platform for users to share and distribute their content, and then profit by selling users' data, preferences, and attention to advertisers.

The different kinds of UGC. From digital.gov/2016/07/11/how-to-leverage-user..


User-generated content is simultaneously essential to these corporations' profits and one of their greatest liabilities. Content moderation has therefore become an essential activity for platforms that distribute user-generated content. It is the implementation of the policies, processes, and governance mechanisms that deal with detecting and removing illegal and objectionable content and, nominally at least, with looking after the safety and well-being of platform users.

The owners of these digital platforms must conform to laws that deem certain content illegal, like copyright violations, or dangerous, like malware. While not illegal, organized disinformation and propaganda campaigns run by state actors can have serious effects on society and leave users frustrated, angry, and reluctant to use a platform. To ensure growth (and, more importantly for the platform's corporate owners, increased profits), platforms need to provide a space that encourages users to create. Abuse, hostility, pile-ons, and doxxing all affect a user's desire to use the platform.

To reduce time-consuming human moderation, these content moderation policies and processes must be supported by automated tools powered by machine learning that can deal with the scale of moderation required, which for platforms like Facebook can be on the order of billions of users. Moderating user-generated content is one of the most difficult, labour-intensive, emotionally draining, and politically charged tasks that the human administrators, operators, and moderators of social media platforms must perform.

Social media giants like Facebook, Twitter, and Twitch, together with platform, infrastructure, hardware, and service providers like Google, Microsoft, Amazon, and Cloudflare, are all corporations that can unilaterally define terms of use and declare who is in violation of these policies. Owners of digital platforms and service providers face substantial pressure from their respective governments, journalists, activists, and individuals to remove or censor certain content.

Three years ago, in August 2019, Cloudflare terminated its services to the anonymous imageboard forum 8chan over its alleged connections to the mass shootings in El Paso, Texas, and Dayton, Ohio.

Cloudflare claimed its motivations for ending its services to 8chan were purely ethical, based on its application of "The Rule of Law":

...our concern has centered around another much more universal idea: the Rule of Law. The Rule of Law requires policies be transparent and consistent. While it has been articulated as a framework for how governments ensure their legitimacy, we have used it as a touchstone when we think about our own policies.

But many were skeptical. Some pointed out that Cloudflare's actions were probably motivated by its upcoming IPO more than by any ethical concerns. Even its statement on 8chan and the shootings seemed to be in part a covert marketing brochure:

We have been successful because we have a very effective technological solution that provides security, performance, and reliability in an affordable and easy-to-use way. As a result of that, a huge portion of the Internet now sits behind our network. 10% of the top million, 17% of the top 100,000, and 19% of the top 10,000 Internet properties use us today...

Regardless of Cloudflare's motivation, the ability of network service providers like Cloudflare to unilaterally deny service to a public Internet forum reinvigorated interest in decentralized infrastructure and network service technologies.

One of the many benefits of decentralized storage technology like IPFS is its ability to resist this kind of unilateral censorship. When you store a video or file on IPFS, it gets split into smaller chunks or nodes, cryptographically hashed, and assigned a unique identifier called a CID. When anyone in the world requests a CID from an IPFS node, IPFS nodes communicate among themselves in a peer-to-peer fashion to work out which are the closest nodes that hold a copy of the data corresponding to the requested CID, and that data gets sent to the node making the request on behalf of the user. There is no single server address that can be blocked, DDoSed, or taken offline, and no centralized directory or index that can block a request for a CID.
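The chunk-and-hash idea can be illustrated with a deliberately simplified sketch. It is not the real IPFS algorithm: actual CIDs use a multihash-based encoding and a Merkle DAG layout, and the chunk size and the `toy_cid` helper below are assumptions made purely for illustration.

```python
from __future__ import annotations

import hashlib

CHUNK_SIZE = 256 * 1024  # assumed chunk size for this sketch


def split_chunks(data: bytes, size: int = CHUNK_SIZE) -> list[bytes]:
    """Split data into fixed-size chunks (the last one may be shorter)."""
    return [data[i:i + size] for i in range(0, len(data), size)] or [b""]


def toy_cid(data: bytes, size: int = CHUNK_SIZE) -> str:
    """Hash each chunk, then hash the concatenated chunk digests.

    A toy stand-in for a CID, not the real IPFS encoding.
    """
    digests = [hashlib.sha256(chunk).digest() for chunk in split_chunks(data, size)]
    return hashlib.sha256(b"".join(digests)).hexdigest()
```

Because the identifier is derived only from the content, identical data yields the same identifier on every node, and changing a single byte yields a different one. That is what lets any node that holds the data answer a request for it.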

![img](miro.medium.com/max/1310/0*1rGbLPMxd_6CwFCJ)

This approach has obvious advantages and disadvantages. IPFS may be able to effectively resist censorship, but what happens when people upload child sexual abuse material (CSAM) or abuse others using revenge porn or doxxing?

The centralization of social media platforms that host UGC has one defining characteristic: moderation is done solely at the discretion of the platform owners. The policies of Facebook, Google, et al. define what is acceptable content and what is not, and the power to remove content (or users) is centralized in a single authority.

But how is decentralized moderation possible?

Decentralized moderation describes a scenario in which no such centralized authority exists: no single party defines the rules and standards for user-generated content or holds overarching power to remove any content that does not follow them.

Moderating IPFS

One approach to moderating IPFS content, pioneered by Cloudflare, which operates one of the most widely used public IPFS gateways, is a patch to the mainline IPFS code called safemode. It allows gateway operators to block blacklisted CIDs at the gateway level, stopping users from ever retrieving a blacklisted CID via HTTP regardless of which node has the CID data pinned.

This approach, though unquestionably effective, reintroduces the spectre of centralized censorship by network service providers, which is one of the things IPFS is supposed to prevent. Shouldn't an IPFS node operator have the right to decide what content can be hosted by their node, and shouldn't HTTP gateway operators like Cloudflare respect that decision?

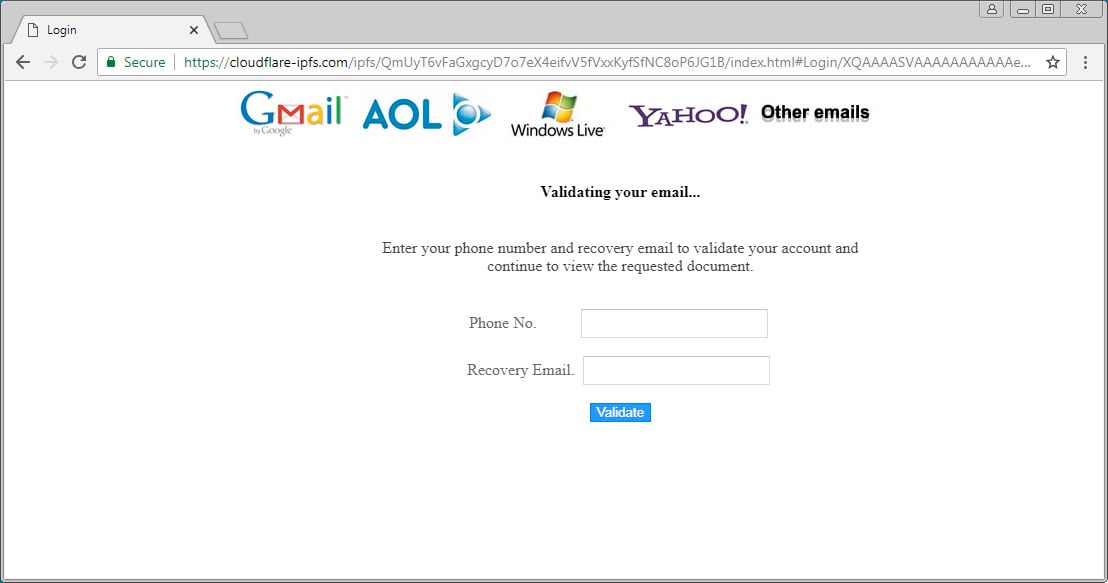

From Cloudflare's point of view, just as in the case of 8chan, this is an issue of liability and public image. When an HTTP gateway serves IPFS content, the browser has no way of knowing the true origin of the content. All it sees is that the content is being served from a particular domain. For example, consider the screenshot below.

HTML forms hosted on IPFS and accessed via Cloudflare's gateway appear to have an SSL certificate signed by a reputable authority, even when those forms are actually part of a phishing scheme.

Obviously, a company like Cloudflare isn't keen on having malware, phishing schemes, et al. served from one of its official domains, regardless of whether or not its solution goes against the ethos of decentralized storage networks like IPFS. For Cloudflare, the safety of its users and (most importantly) the protection of its corporate brand and image are of paramount importance.

It seems clear that the IPFS community, and decentralized storage providers in general, cannot rely on HTTP gateway operators like Cloudflare to provide content moderation that respects the principles of decentralization.

Introducing maude

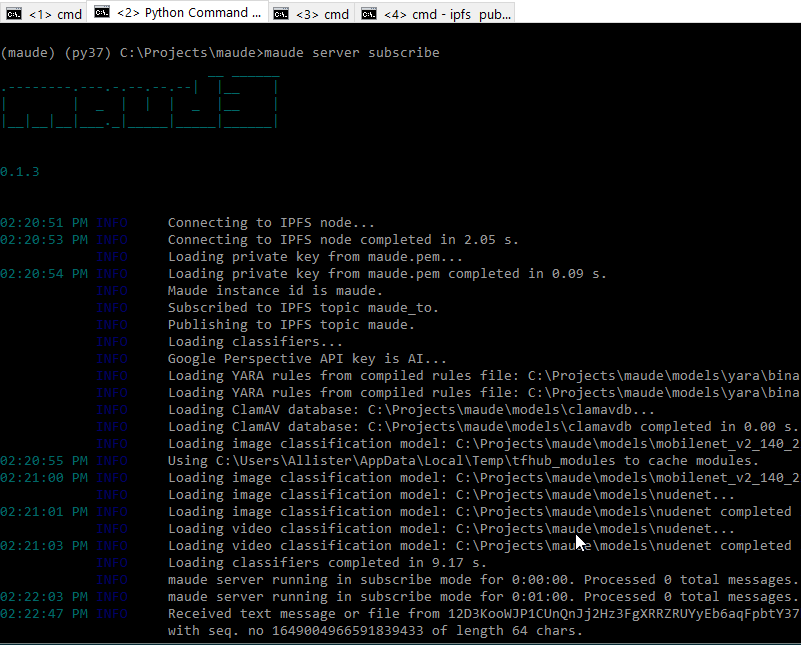

maude server running in subscribe mode analyzing user generated text sent over IPFS pubsub


maude is an autonomous decentralized moderation system for IPFS and Web3.

maude is an attempt to satisfy the constraints that ML-powered automated content moderation imposes on decentralized storage services like IPFS, while operating without a centralized decision-making authority.

maude is a Python program that has two primary modes of operation:

- Monitoring

- Subscription

Monitoring

In monitor mode, the maude daemon runs locally on an IPFS node and monitors the file or directory CIDs that are pinned locally.

maude uses ML models like nsfw_model to analyze the content of file types it recognizes, such as images and videos.

The ML models classify the image, video, or other content using attributes like nsfw, sexy, porn, etc.
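As a rough illustration of how classifier output might be turned into moderation labels, the sketch below assumes a classifier that returns a score between 0 and 1 per category. The thresholds, the `flag_categories` helper, and the example scores are hypothetical and are not taken from maude itself.

```python
from __future__ import annotations

# Assumed per-category thresholds; a real deployment would need to tune these.
THRESHOLDS = {"porn": 0.8, "sexy": 0.9}


def flag_categories(
    scores: dict[str, float],
    thresholds: dict[str, float] = THRESHOLDS,
) -> list[str]:
    """Return, in sorted order, the categories whose score meets its threshold."""
    return sorted(
        category
        for category, threshold in thresholds.items()
        if scores.get(category, 0.0) >= threshold
    )


# Hypothetical classifier output for one locally pinned image.
example_scores = {"neutral": 0.05, "sexy": 0.10, "porn": 0.85}
flagged = flag_categories(example_scores)
```

Keeping the raw scores alongside the flagged categories lets downstream consumers apply stricter or looser thresholds of their own.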

Subscription

In subscription mode, maude subscribes to IPFS pubsub topics. These act as channels to which decentralized apps like chat apps or social media platforms can send user-generated text content, such as chat messages, blog posts, and forum posts, for analysis and moderation. maude uses NLP models or services like Google's Perspective API that classify text according to attributes like profanity, sexually explicit language, toxicity, and identity attacks, and that can identify hate speech or incitements to violence.
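A text-scoring request of this kind might look roughly like the sketch below. It follows the publicly documented Perspective API request and response shape as best understood here, so the endpoint and field names should be checked against the current documentation. The helper names are hypothetical, no network call is made, and how maude itself calls the service is not shown in this article.

```python
from __future__ import annotations

import json

# Documented analyze endpoint; calling it requires an API key (not used here).
ANALYZE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"


def build_request(text: str, attributes: list[str]) -> str:
    """Build the JSON body asking for a score per requested attribute."""
    body = {
        "comment": {"text": text},
        "requestedAttributes": {name: {} for name in attributes},
    }
    return json.dumps(body)


def summary_scores(response: dict) -> dict[str, float]:
    """Extract the summary score for each attribute from a parsed response."""
    return {
        name: detail["summaryScore"]["value"]
        for name, detail in response.get("attributeScores", {}).items()
    }


# Hand-written sample response, for illustration only.
sample_response = {
    "attributeScores": {
        "TOXICITY": {"summaryScore": {"value": 0.92, "type": "PROBABILITY"}},
    }
}
scores = summary_scores(sample_response)
```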

In both monitoring and subscription modes, maude publishes the data it has gathered via IPFS pubsub message queues as moderation feeds. A feed contains all the information maude was able to gather about a particular file CID pinned locally by a node, or about an identifiable user-generated message or post. Other nodes, network apps, browsers, or users can subscribe to these moderation feeds and decide to ignore, filter, or block this CID or content when they encounter it.
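The article does not specify the feed format, so the following is only a sketch of what a moderation feed message and a subscriber-side check could look like. The field names (`cid`, `labels`, `instance`, `timestamp`) and both helpers are assumptions, and the pubsub transport is left out.

```python
from __future__ import annotations

import json
import time


def make_feed_message(
    cid: str,
    labels: dict[str, float],
    instance_id: str,
    timestamp: float | None = None,
) -> str:
    """Serialize one moderation record as JSON (hypothetical schema)."""
    record = {
        "cid": cid,
        "labels": labels,
        "instance": instance_id,
        "timestamp": time.time() if timestamp is None else timestamp,
    }
    return json.dumps(record, sort_keys=True)


def should_hide(message: str, label: str, threshold: float) -> bool:
    """Subscriber-side decision: hide content if a label's score is high enough."""
    record = json.loads(message)
    return record["labels"].get(label, 0.0) >= threshold
```

The publisher only reports what it found. Whether to act on a message, and at what threshold, is left to each subscriber.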

maude instances are designed to work collaboratively in an open trust network. Each maude instance has a public/private key pair, and each CID analysis is signed and constitutes a claim by the instance about the CID. Each claim can itself be ranked by human or automated moderators or observers according to how accurate and useful it is. Each maude instance thus builds up a reputation feed that ranks the moderation assertions and claims it has made, and network apps or users can use this reputation feed to automate deciding which maude moderation instances are reliable or unbiased.
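One simple way such a reputation could be computed is sketched below, assuming each rating is a number between 0 and 1 attached to a claim made by a given instance. The averaging rule, the thresholds, and the function names are assumptions rather than maude's actual scheme, and signature verification is omitted because it would need an asymmetric cryptography library.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def reputation(ratings: list[tuple[str, float]]) -> dict[str, float]:
    """Average the ratings (0.0 to 1.0) received by each instance's claims."""
    by_instance: dict[str, list[float]] = defaultdict(list)
    for instance_id, rating in ratings:
        by_instance[instance_id].append(rating)
    return {i: sum(values) / len(values) for i, values in by_instance.items()}


def trusted_instances(
    ratings: list[tuple[str, float]],
    min_score: float = 0.7,
    min_ratings: int = 2,
) -> set[str]:
    """Instances with enough ratings and a high enough average score."""
    scores = reputation(ratings)
    counts = Counter(instance_id for instance_id, _ in ratings)
    return {
        i for i, score in scores.items()
        if score >= min_score and counts[i] >= min_ratings
    }
```

Requiring a minimum number of ratings keeps a brand-new instance with a single favourable rating from being trusted automatically.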

With this system, it is up to individual IPFS node operators or IPFS end users to decide which moderation feeds to use to implement blacklists or filtering of IPFS content. IPFS blocks are immutable, but individual nodes may choose to make CIDs determined to contain CSAM, revenge porn, doxxing, or other abusive and illegal content harder to discover and access by not locally pinning those blocks. End-user tools like browsers and browser extensions can automatically block and filter CIDs based on trustworthy maude moderation feeds, but that decision is ultimately up to the user. Filtering and blocking of IPFS content can thus be done voluntarily and in a decentralized manner, giving the maximum amount of decision-making and control to individual operators and users while still retaining the advantages and scalability of ML-powered automated content moderation for Web3 apps and platforms.
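A user-side filter along these lines could be as small as the sketch below. The claim structure (`cid`, `instance`, `flagged`) and the voting rule are assumptions for illustration, and the set of trusted instances is whatever the operator or user has chosen.

```python
from __future__ import annotations


def blocked_cids(
    claims: list[dict],
    trusted: set[str],
    min_votes: int = 1,
) -> set[str]:
    """Return CIDs flagged by at least `min_votes` distinct trusted instances."""
    votes: dict[str, set[str]] = {}
    for claim in claims:
        if claim["instance"] in trusted and claim["flagged"]:
            votes.setdefault(claim["cid"], set()).add(claim["instance"])
    return {cid for cid, voters in votes.items() if len(voters) >= min_votes}


# Hypothetical claims received from several moderation feeds.
claims = [
    {"cid": "cid-1", "instance": "maude-a", "flagged": True},
    {"cid": "cid-1", "instance": "maude-b", "flagged": True},
    {"cid": "cid-2", "instance": "maude-x", "flagged": True},
    {"cid": "cid-3", "instance": "maude-a", "flagged": False},
]
my_blocklist = blocked_cids(claims, trusted={"maude-a", "maude-b"}, min_votes=2)
```

Claims from instances the user has not chosen to trust are ignored entirely, so two users looking at the same feeds can end up with different blocklists.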

maude can use technology like Microsoft's PhotoDNA via libraries like PyPhotoDNA, and Facebook's ThreatExchange via its Python client, to identify images or other content involving CSAM and other types of illegal or dangerous content. Another moderation technique that will be trialled in maude is what can be called (for lack of a better term) Mod2Vec: creating sentences out of raw classification data for images or videos, e.g. "This is a very large image with a small amount of nudity and several faces visible", and then using NLU models and libraries like Gensim to classify and group these sentences using existing word vectors. This provides a way to incorporate semantic information into automated moderation decisions.
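The first half of the Mod2Vec idea, turning raw classification data into a sentence, can be sketched as follows. The size and score cut-offs, the wording, and the function names are all assumptions. The later step of embedding and grouping the sentences with word vectors is not shown.

```python
def size_word(width: int, height: int) -> str:
    """Describe image size in words using assumed pixel-count cut-offs."""
    pixels = width * height
    if pixels >= 8_000_000:
        return "very large"
    if pixels >= 2_000_000:
        return "large"
    if pixels >= 300_000:
        return "medium-sized"
    return "small"


def nudity_phrase(score: float) -> str:
    """Describe a nudity score between 0 and 1 in words (assumed cut-offs)."""
    if score >= 0.7:
        return "a large amount of nudity"
    if score >= 0.3:
        return "a moderate amount of nudity"
    if score > 0.0:
        return "a small amount of nudity"
    return "no nudity"


def faces_phrase(count: int) -> str:
    """Describe the number of detected faces in words."""
    if count <= 0:
        return "no faces"
    if count == 1:
        return "one face"
    if count == 2:
        return "two faces"
    return "several faces"


def describe_image(width: int, height: int, nudity: float, faces: int) -> str:
    """Compose one sentence from the raw classification data."""
    return (
        f"This is a {size_word(width, height)} image with "
        f"{nudity_phrase(nudity)} and {faces_phrase(faces)} visible"
    )
```

For a 4000 by 3000 pixel image with a nudity score of 0.1 and four detected faces, `describe_image(4000, 3000, 0.1, 4)` produces the example sentence quoted above.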